The Windows versions of NNSuperResolution have been in the wild for about a month now! We are very excited about this, since it’s something a lot of people have been asking for. It’s also worth mentioning that the plugin is Nuke Indie compatible. You can download a copy directly from the downloads page to try it out. If you want to test it without the added watermark/noise, please request a trial license. It’s free and quick, and will let you run the plugin unrestricted for 10 days. If you need more time to evaluate it, please get in contact using the comments field on the trial request page and we’ll organise something suitable.

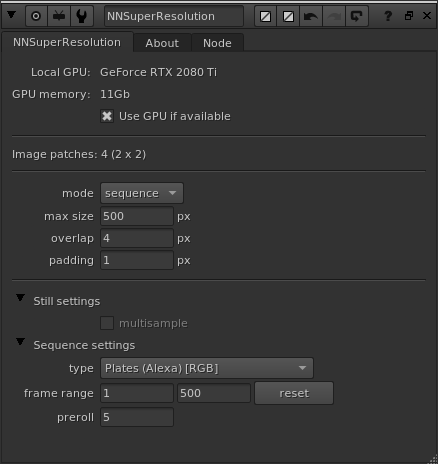

The next couple of things we are looking into for NNSuperResolution are making the “still mode” able to upscale CG (RGBA), just like the “sequence mode”, which can already do this. We have also started training variants of the neural networks that upscale by only 2x. Currently all upscaling with NNSuperResolution is 4x, but you don’t always need to go that large. If you already have full HD material (1080p) and want it remastered as UHD (4K), a 2x option would be good to have available directly.

We are now featured on the official Plug-ins for Nuke page on Foundry’s website. 🙂

We are also working on a demo video showing the plugin in action directly in Nuke. While the best way is always to try things out for yourself on your own material, it can also be nice to see it in action on YouTube.

Stay tuned!

Cheers, David