Usage of the node

The NNFlowVector node can produce normal motion vectors that are compatible with how Nuke handles motion vectors, i.e. they become available in the “motion” layer and also as a subset in the “forward” and “backward” layers. These can be used in native Nuke nodes such as VectorBlur, as well as in third-party tools from Nukepedia (www.nukepedia.com). The motion vector output is available, without limits, in the free version of NNFlowVector.

Note that you should set the “interleave” option in the Write node to “channels, layers and views” when pre-comping out motion vectors (this is Nuke’s default setting). This way Nuke automatically combines the “forward” and “backward” layers so that they are also represented in the “motion” layer. If you write out the files with “interleave” set to, for example, “channels” only, the “forward” and “backward” layers are written out as separate parts in the resulting multi-part EXR sequence, and Nuke then fails to recombine them correctly into the “motion” layer when the files are read in again. You can easily see if this has happened by checking whether a “motion_extra” layer has been created instead of the standard “motion” layer.
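The “motion_extra” check described above can be sketched as a small helper. This is only an illustration: the function name is hypothetical, and it works on a plain list of layer names. Inside Nuke such a list could come from something like `nuke.layers(read_node)`, but that is an assumption here and the snippet itself does not depend on Nuke.

```python
def motion_layers_were_split(layer_names):
    """Return True if the read-in EXR shows the tell-tale "motion_extra" layer.

    layer_names is a plain list of layer names (hypothetical input for this sketch).
    """
    return "motion_extra" in set(layer_names)


# Layers as they might look after a correct round trip through a Write node.
good = ["rgba", "forward", "backward", "motion"]
# Layers as they might look when the vectors were written as separate parts.
bad = ["rgba", "forward", "backward", "motion_extra"]

for name, layers in (("good", good), ("bad", bad)):
    status = "re-render with interleave fixed" if motion_layers_were_split(layers) else "ok"
    print(name, "->", status)
```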

SmartVector, and the tools that come bundled with it in NukeX, is truly a brilliant invention that opens up a lot of powerful compositing techniques. The most obvious one is the VectorDistort node, which makes it possible to track images, or image sequences, onto moving and deforming objects in plates. This is made possible by a more complex set of motion vectors called smart vectors. While NukeX is able to produce these smart vectors, they are quite often rather rough, which means they don’t hold up over longer sequences: the material you are tracking onto the plates deteriorates over time. This is where NNFlowVector comes in, by producing cleaner and more stable motion vectors in the smart vector format. Hence the output of NNFlowVector is directly usable in the VectorDistort node, as well as in other smart vector compatible nodes like VectorCornerPin and GridWarpTracker.

You have to use the licensed/paid version of NNFlowVector to be able to produce smart vectors. If you want to try this feature out first, there are free trial licenses available upon request.

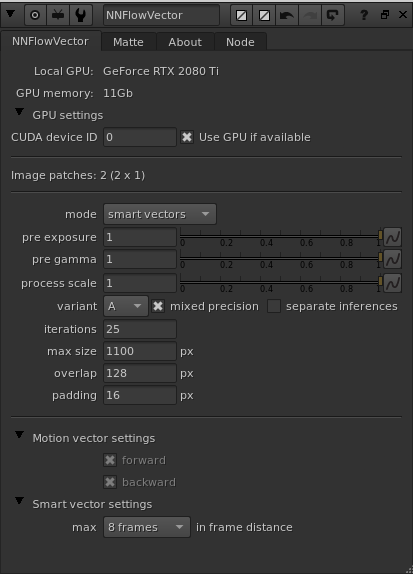

Knob reference

The knobs “max size”, “padding” and “overlap” are mostly related to being able to generate motion vectors for pretty large resolution sequences using limited VRAM on the graphics card. The neural network requires pretty large amounts of memory even for small resolution input sequences. To be able to generate vectors for a full HD sequence or larger, the images most likely need to be split up into several passes/patches to fit into memory. This is all done and handled transparently “under the hood” so the artist can focus on more important things. You might need to tweak the settings though depending on the use case and available hardware on your workstation.

mode

You can switch between generating normal “motion vectors”, and the more Nuke specific “smart vectors”, depending on your need and what nodes you are going to use the motion vectors with.

pre exposure

This is a normal exposure grade correction that is applied before the neural network processing. It is here for artist convenience since it’s worth playing a bit with the exposure balance to optimise your output.

pre gamma

This is a normal gamma grade correction that is applied before the neural network processing. It is here for artist convenience since it’s worth playing a bit with the gamma balance to optimise your output.

process scale

This is a new knob since v1.5.0. It defines the resolution at which the vectors are calculated. The default value of 1.0 means the vectors are calculated at the same resolution as the input images (no pre-scale is done). A value of 0.5 means half res, and a value of 2.0 means double res. Being able to alter the process resolution is useful because it affects rendering speed, memory use and the amount of detail produced. For example, it’s quite often enough to calculate the vectors of UHD material in full HD instead (by setting the process scale to 0.5). The vectors are always automatically reformatted back to the input resolution and scaled accordingly, so they are directly usable together with the input material. Please have a play with your particular material to find the optimal process scale setting.
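As a rough illustration of the arithmetic behind the process scale, the sketch below computes the processing resolution for a given input size and scales a vector back up to input resolution. The function names and the rounding behaviour are assumptions made for this example, not a description of the plugin’s internals.

```python
def process_resolution(width, height, process_scale=1.0):
    """Resolution the vectors would be calculated at (rounding is an assumption)."""
    return max(1, round(width * process_scale)), max(1, round(height * process_scale))


def rescale_vector(u, v, process_scale):
    """Scale a vector measured at process resolution back to input resolution."""
    return u / process_scale, v / process_scale


# UHD input processed at half res ends up at full HD.
print(process_resolution(3840, 2160, 0.5))
# A 3 pixel move at half res corresponds to a 6 pixel move in the UHD plate.
print(rescale_vector(3.0, -1.5, 0.5))
```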

variant

This is a new knob since v1.5.0. The variant, A to H, sets which neural network training variant to use. As of NNFlowVector v2.1.0, there is also a new transformer based model available as the “AA” variant. This new model often produces even better vectors for tricky image sequences compared to the normal A to H variants. It will however use more VRAM on your GPU to generate the vectors. To be able to fit the model and processing you might need to lower the “max size” knob value. You can also test enabling the new knob “separate inferences”, see below for more info.

We provide the NNFlowVector plugin with 9 different training variants made with slightly different optimizations and training settings. The different alternatives produce pretty similar results, but a particular one could produce a favourable result with your particular material. If you have the processing power and time, you could try them all out to really get the best possible result. If you are unsure and just want to quickly get a good result, please use the default variant of “A”, or try the new “AA” variant.

mixed precision

This is a new knob since v1.5.0. When this is “on” (which is the default) the plugin will try to use mixed precision on the GPU for calculations. What this means is that for certain supported calculations, the GPU will process data using half floats (16 bit) instead of normal full floats (32 bit). This results in somewhat lower VRAM usage and faster processing, at the small cost of a slightly less accurate result. You usually won’t notice the difference in quality at all, but the resulting processing speed can be, for example, 15% faster. The actual speed difference depends on your exact GPU model.
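To make the half float versus full float trade-off concrete, here is a minimal sketch using only Python’s standard `struct` module. It shows the storage size of each format and the small rounding error a value picks up when stored as a half float. It says nothing about which calculations the plugin actually runs in half precision; the helper names are made up for this example.

```python
import struct


def bytes_per_value(fmt):
    """Storage size in bytes of one value in the given struct format."""
    return struct.calcsize(fmt)


def through_half_float(value):
    """Round-trip a Python float through a 16 bit half float."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


half_size = bytes_per_value("<e")   # 16 bit half float
full_size = bytes_per_value("<f")   # 32 bit full float
print("half:", half_size, "bytes, full:", full_size, "bytes")

# The same value stored as a half float is close to, but not exactly, the original.
original = 0.1
print("rounding error:", abs(through_half_float(original) - original))
```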

separate inferences

This is a new knob since v2.1.0. When “separate inferences” is on, the forward motion vectors and the backward motion vectors are calculated in separate inference/calculation passes through the neural network. This takes a little bit more time, but uses less GPU memory which (depending on your hardware) might be a good thing. The default (having this checkbox off) is to calculate the forward and backward vectors in parallel using one inference run through the neural network (a touch faster, but uses more memory).

iterations

Defines how many refinement iterations the solve algorithm will do. This knob has been re-implemented as a free typed integer as of v1.5.0 (before that it was a static drop down menu). The default has been increased to 25 (it was earlier set to 15). You can go really high on the iterations if you like, for example 100 or more, but that doesn’t necessarily produce a better result. Please have a play with your particular material to find the optimal iterations setting.

Please note that this knob doesn’t affect the new “AA” variant, hence it’s greyed out when that variant is used.

max size

Max size sets the maximum dimension, in one direction, that an image patch can have and is one of the most important knobs on the plugin. The default is 1100, which means that the largest input patch allowed is 1100×1100 pixels. From our experience that will use up to around 8 GB of VRAM on your graphics card (this applies to the A-H variants; for the new “AA” variant, please try values in the realm of 700 instead). If you haven’t got that much VRAM available and free, the processing will fail with a CUDA memory error, and the node in Nuke will error in the DAG. To remedy this, and also to be able to input resolutions much higher than a single patch size, you can tweak the max size knob to adapt to your situation. You can lower it to adapt to having much less VRAM available. The plugin will split the input image into lots of smaller patches and stitch them together in the background. This will of course be slower, but it makes it possible to still run and produce much larger results. There is a text status knob above the “max size” knob (in between the two dividers) that lets you know how many image patches the plugin will run to create the final motion vector output.

Since motion vectors describe local and global movements in plates, they are pretty sensitive to what image regions are part of the algorithm solve. What this means is that the more of the image the algorithm sees, the better the result it will be able to produce. Keeping the max size as high as you can will produce better motion vectors. It’s worth mentioning that this is much more sensitive in a plugin like this compared to, for example, NNSuperResolution.

overlap

Since the plugin will run multiple patches through the neural network, there will be lots of image patch edges present. The results at the edges are not as good as those a bit further into the patch. Because of this, the patches are processed with some spatial overlap. The overlap knob value sets the number of pixels by which the patches overlap. The default value is 128, which is usually a good start.

padding

The padding is closely connected to the overlap above. While the overlap sets the total number of pixels by which the patches overlap, the padding reduces the actual cross fading area where the patches are blended, so that the very edge pixels are not used at all. The padding is also specified in pixels, and the default value is 16. This way of blending all patches together has proven pretty successful in our own testing.
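To get a feel for how “max size”, “overlap” and “padding” interact, the following sketch estimates a patch count and a cross fade width. It is a simplified model under stated assumptions, not the plugin’s actual tiling code: it assumes patches are laid out on a regular grid where each patch is “max size” pixels wide and neighbouring patches share “overlap” pixels, and that the padding is removed from both sides of the overlap before blending. The status text above the “max size” knob remains the authoritative patch count.

```python
import math


def patches_along_axis(size, max_size, overlap):
    """Number of patches needed to cover one image axis (assumed regular grid)."""
    if size <= max_size:
        return 1
    step = max_size - overlap  # how far each new patch advances
    if step <= 0:
        raise ValueError("overlap must be smaller than max size")
    return math.ceil((size - overlap) / step)


def patch_count(width, height, max_size=1100, overlap=128):
    """Estimated total number of patches for an image."""
    return (patches_along_axis(width, max_size, overlap)
            * patches_along_axis(height, max_size, overlap))


def blend_width(overlap=128, padding=16):
    """Assumed cross fade width once the edge pixels on both sides are skipped."""
    return max(0, overlap - 2 * padding)


for label, (w, h) in {"HD": (1920, 1080), "UHD": (3840, 2160)}.items():
    print(label, "patches:", patch_count(w, h))
print("cross fade width:", blend_width())
```

Lowering “max size” in this model quickly increases the patch count, which matches the trade-off described above: less VRAM per patch, but more patches to process and less image context per patch.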

If you are getting CUDA processing errors, please try to process the same material but turning off mixed precision.

motion vector mode: forward and backward

Motion vectors describe the local movement of different parts of the plate from the current frame to the next frame (forward), and from the current frame to the frame before (backward). Different tools and algorithms have different needs for what to use in regards to motion vectors, some work with just forward vectors and some need both forward and backward vectors. You have the option of which ones you want to calculate, but we recommend to always calculate and save both.
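The relationship between forward and backward vectors can be illustrated with a tiny round trip: follow the forward vector from the current frame to the next one, then follow the backward vector found there back again. If both vectors are accurate you should land close to where you started. This consistency check is a generic technique and is not claimed to be something NNFlowVector does internally; the function names and the convention that a vector is a simple (u, v) pixel offset are assumptions for this sketch.

```python
import math


def follow(x, y, vector):
    """Move a pixel position by a (u, v) motion vector."""
    u, v = vector
    return x + u, y + v


def round_trip_error(x, y, forward, backward_at_target):
    """Distance between the start position and where the round trip ends.

    forward            : vector at (x, y) in the current frame, pointing to the next frame
    backward_at_target : vector at the landing position in the next frame,
                         pointing back to the current frame
    """
    x1, y1 = follow(x, y, forward)
    x0, y0 = follow(x1, y1, backward_at_target)
    return math.hypot(x0 - x, y0 - y)


# Perfectly consistent pair: the backward vector exactly undoes the forward one.
print(round_trip_error(100.0, 50.0, (3.0, -1.0), (-3.0, 1.0)))
# Inconsistent pair, e.g. around an occlusion: the round trip misses by one pixel.
print(round_trip_error(100.0, 50.0, (3.0, 0.0), (-2.0, 0.0)))
```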

Worth noting is that all the NNFlowVector Util nodes that take motion vectors as input (please see below in this document) require both forward and backward vectors.

smart vector mode: frame distance

This knob has been re-implemented as a drop down menu as of v1.5.0 (it was earlier a bunch of checkboxes, which made it way too confusing). The drop down menu specifies the highest value of “frame distance” that you can use in a VectorDistort node when using the rendered SmartVector compatible output of NNFlowVector. If you want to have all the possible options available in a VectorDistort node, you should set the frame distance to 64. This will require a lot more rendering time though. If you know that you will only use up to a frame distance of 2, for example, you can optimise the rendering of your smart vector compatible output a lot by only rendering the motion layers needed for that particular setting (and hence setting the max frame distance in NNFlowVector to the same value of 2).
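As a rough way to see why a lower frame distance renders faster, the sketch below lists the frame distances that would need to be rendered for a given maximum. It assumes the available distances are the powers of two up to 64 (1, 2, 4 and so on); that layout is an assumption for this example and is not stated in the text above.

```python
def frame_distances(max_frame_distance):
    """Frame distances up to the chosen maximum (assumed to be powers of two)."""
    if max_frame_distance < 1:
        raise ValueError("frame distance must be at least 1")
    distances = []
    distance = 1
    while distance <= max_frame_distance:
        distances.append(distance)
        distance *= 2
    return distances


for maximum in (2, 64):
    needed = frame_distances(maximum)
    print("max", maximum, "->", len(needed), "sets of vectors:", needed)
```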

You have to use the licensed/paid version of NNFlowVector to be able to produce smart vectors. If you want to try this feature out first, please request a free trial license.

CUDA device ID

This is a new knob since v1.5.0, and it’s only present if you’ve installed a GPU version of the plugin. This knob specifies which GPU in the system to use, by its CUDA device ID. It’s only relevant if you have multiple GPUs installed in the system. The default value is 0, which is the default CUDA processing device, usually the fastest/most modern GPU installed. To find the specific device IDs assigned to the GPUs in your particular system, please refer to the output of running the command “nvidia-smi” in a terminal.
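If you prefer to list the GPUs from a script rather than reading the “nvidia-smi” table by eye, something along these lines could work. It is a sketch that relies on nvidia-smi’s CSV query output (`--query-gpu=index,name,memory.total --format=csv,noheader`); the helper names are made up for this example, and the sample text is illustrative rather than output from a real system.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,name,memory.total", "--format=csv,noheader"]


def parse_gpu_list(text):
    """Parse lines such as '0, Some GPU Name, 24576 MiB' into dictionaries."""
    gpus = []
    for line in text.strip().splitlines():
        index, name, memory = [part.strip() for part in line.split(",", 2)]
        gpus.append({"id": int(index), "name": name, "memory": memory})
    return gpus


def list_gpus():
    """Run nvidia-smi and return the parsed GPU list (needs an NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_list(result.stdout)


# Illustrative sample text, so the parser can be tried without a GPU present.
sample = "0, Example GPU A, 24576 MiB\n1, Example GPU B, 8192 MiB\n"
for gpu in parse_gpu_list(sample):
    print(gpu["id"], gpu["name"], gpu["memory"])
```

One caveat: nvidia-smi lists GPUs in PCI bus order, while CUDA applications may order devices differently by default. If the IDs do not seem to match, setting the environment variable `CUDA_DEVICE_ORDER=PCI_BUS_ID` before launching Nuke makes CUDA use the same ordering as nvidia-smi.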

Use GPU if available

There is another knob at the top of the plugin called “Use GPU if available”, and it’s “on” by default (recommended). This knob is only present if you’ve installed a GPU version of the plugin. This knob is not changing the motion vector output, but rather how the result is calculated. If it is “on” the algorithm will run on the GPU hardware of the workstation, and if it’s “off” the algorithm will run on the normal CPU of the workstation. If the plugin can’t detect a CUDA compatible GPU, this knob will automatically be disabled/greyed out. This knob is similar to the one you’ll find in Foundry’s own GPU accelerated plugins like for example the ZDefocus node.

We highly recommend always having this knob “on”, since the algorithm will run A LOT faster on the GPU than on the CPU. To give you an idea of the difference, we’ve seen calculation times for the same input image of around 2.0 seconds/frame using the GPU and about 34 seconds/frame on the CPU (i.e. about 17x slower on the CPU). These numbers are just a simple example to show the vastly different processing times you will get using the GPU vs. the CPU. For speed references of processing, please download and test run the plugin on your own hardware/system.

The “Matte” tab’s knobs

process scale

This knob specifies at what resolution the matte region should be calculated, i.e. the calculation of the inpainting and enhancement of the “ignored” matte region specified by the alpha channel of the matte input. This process is rather compute and memory heavy, so it’s good to keep this at a rather low ratio to not run out of CUDA memory (GPU VRAM). The default value is 0.4.

border padding

The border padding setting is only relevant if your input matte is touching any of the edges of the image. If it is, then some extra inpainting steps need to be performed, and this is where the “border padding” comes in. If you are getting artefacts at the image edge in the matte region, such as black bleed-ins, then try to increase/change the border padding. The default value is 64 pixels.

matte dilation

The matte dilation setting specifies how many pixels to dilate the matte by before it’s used for the inpainting and improvement neural network. Because the original matte is used to actually keymix in the calculated vectors in the matte region, this dilation setting means the end result does not use the outermost pixels produced by the algorithms. The setting basically makes it possible to ignore the outer edge of the produced matte region, which is good because it can have some noisy artefacts in some cases. Keep this value as low as possible, even set it to 0, as long as you’re not experiencing the edge artefacts. If you get them, slowly increase this setting. The default value is 2 pixels.
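For readers unfamiliar with dilation, here is a minimal pure Python sketch of growing a binary matte by a number of pixels. It uses a square neighbourhood, which is an assumption for this example; the shape of the dilation the plugin actually uses is not described here.

```python
def dilate(matte, pixels):
    """Grow the 'on' region of a binary matte by `pixels` in every direction.

    matte is a list of rows containing 0 or 1; a square neighbourhood is assumed.
    """
    height, width = len(matte), len(matte[0])
    result = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if not matte[y][x]:
                continue
            # Switch on every pixel within `pixels` of this matte pixel.
            for yy in range(max(0, y - pixels), min(height, y + pixels + 1)):
                for xx in range(max(0, x - pixels), min(width, x + pixels + 1)):
                    result[yy][xx] = 1
    return result


# A single matte pixel in the middle of a 7x7 image, dilated by the default of 2.
matte = [[0] * 7 for _ in range(7)]
matte[3][3] = 1
for row in dilate(matte, 2):
    print("".join("#" if value else "." for value in row))
```

The inpainting then works on this slightly larger region, while the original, undilated matte is the one used for the final keymix, so the outermost generated pixels never reach the result.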

improvement network

This setting should pretty much always be “on”. It turns the improvement neural network on/off. If it’s set to “off” you will instead only get the pre-processing done before the vectors are fed to the neural network. This will be similar to what a classic “Inpainting” node in Nuke looks like, or a “Laplace region fill” if you’re more into math.

Knob demo video

We haven’t got a video introduction to the knobs for NNFlowVector yet, but if you are interested in the “max size”, “padding” and “overlap” knobs, please watch the following demo of NNSuperResolution since the same info applies for those knobs: