NNSuperResolution is a 2x/4x resolution upscale plugin for Foundry’s Nuke. Enjoy being able to, directly in your composite, upscale photographed/plate material and sequences to a much higher resolution. To test it out for yourself, please use the downloads page. You are also welcome to request a free trial license to run it without added watermarks/noise for a limited time period.

Here is an interactive still image before/after example:

Features

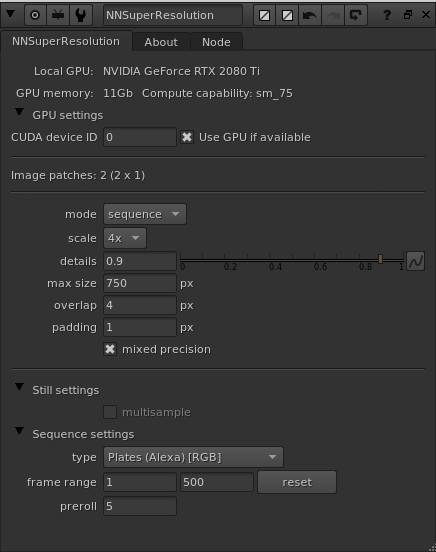

- Upscale with a factor of 2x or 4x.

- Sequence mode for upscaling image sequences like filmed source plates, final composites, CG renders etc.

- Still mode for upscaling photos and other still material like DMP patches, textures etc.

- Processes all the RGB channels of an input image (support for multiple layers, i.e. you can feed multiple layers through the plugin at the same time using Nuke’s native multichannel/layer system).

- CG upscale solution in sequence mode that is capable of upscaling RGBA images (i.e. 3D renders).

- High dynamic range upscaling.

- Native overscan handling (i.e. it handles larger bounding boxes than the image frame/format). Please note that the bounding boxes can’t be animated in sequence mode, i.e. it can’t be different from frame to frame.

- GPU accelerated using CUDA by NVIDIA (requires a NVIDIA graphics card).

- Internal stitching of several inference/image patches (to be able to upscale high resolution images without having that much VRAM available on the GPU).

- Supports Nuke 11.3 and later on Linux.

- Supports Nuke 12.0 and later on Windows.

- Supports Nuke Indie.

- Production friendly sandboxed solution that doesn’t need an internet connection to run.

The algorithm that does the upscaling is based on modern neural network technology (also commonly referred to as deep learning, machine learning or artificial intelligence). There are two modes available which uses different network solutions for best results on either stills or sequences. While the still mode produces sharper results it might stutter/flicker on some moving material. The sequence mode focuses on making the result as sharp and detailed as possible while keeping a focus on making sure the result is temporally stable . Please try out the plugin for yourself by visiting the downloads page before you buy a license.

Documentation

You always get the latest documentation as a PDF in the download zips (in the “docs” folder).

You can also access the NNSuperResolution documentation PDF directly using this link.

Video examples

The best video to start with showcasing NNSuperResolution is our demo video on YouTube (best viewed in it’s native full UHD resolution):

Here are two longer and more elaborate video examples available on YouTube as well. Be sure to view them in their native full 4K (UHD) resolution. The examples are created by using Alexa footage from Arri’s demo footage site (https://www.arri.com/en/learn-help/learn-help-camera-system/camera-sample-footage). Those native 4K Arri Alexa videos have been scaled down to 1K (25% of original resolution), and then run through NNSuperResolution to get to an upscaled 4K resolution video. The videos below are only showing the upscale step from 1K to 4K resolution using both sequence mode and still mode.

These videos are also available as high bitrate MP4 video downloads for people interested in frame stepping and doing a more in-depth comparison:

Interior: NNSuperResolution_Interior_comparison.mp4

Exterior: NNSuperResolution_Exterior_comparison.mp4

If you want to have a quick look at some crop in regions of these videos directly in the web browser, have a look at these four example pages:

#1, Interior, close up on girl’s face

#2, Interior, medium on girl in sofa

#3, Exterior, cows in the background

#4, Exterior, close up on berries in the foreground

Knob reference

To read the documentation for all the plugin’s knobs, please visit the dedicated knob reference page.

Frequently Asked Questions

We have gathered the most common questions about the plugin on a separate Frequently Asked Questions page.

Downloads

All the downloads are available on the dedicated downloads page.

Licensing

To buy a license, please visit our shop. If you want to request a time limited trial license, please use the form on the Request a trial license page.

More still examples

You got some more still examples right below. You can also visit this before & after examples page, which got a lot more still examples to browse.

Pingback:Render license support, Nuke Indie support and NNFlowVector 2.0 – Pixelmania

Pingback:New updated versions of all plugins (Linux) – Pixelmania

Pingback:Video tutorial about Pixelmania’s Nuke plugins – Pixelmania