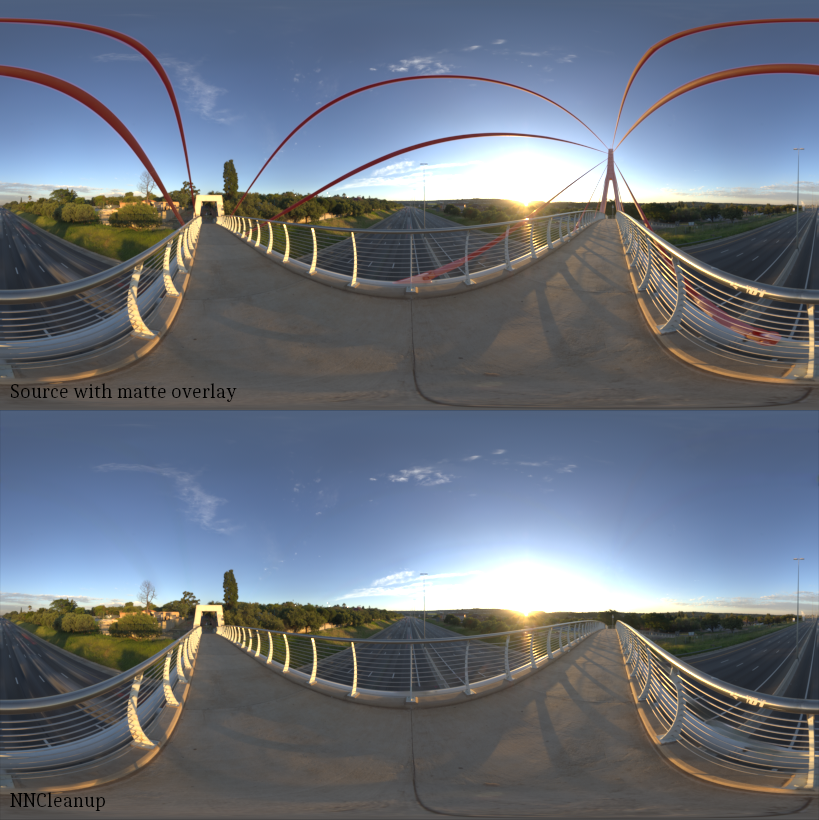

We haven’t posted any new releases for a while, so we feel it’s time to give you an update on what we’re currently up to and what’s to come. But first, a little background on the year so far. The year started with the release of our third Nuke plugin, NNCleanup. After getting v1.0 out in February, we quickly followed up with a couple of maintenance releases. When we felt NNCleanup was in a stable state, we circled back to our most popular plugin, NNFlowVector.

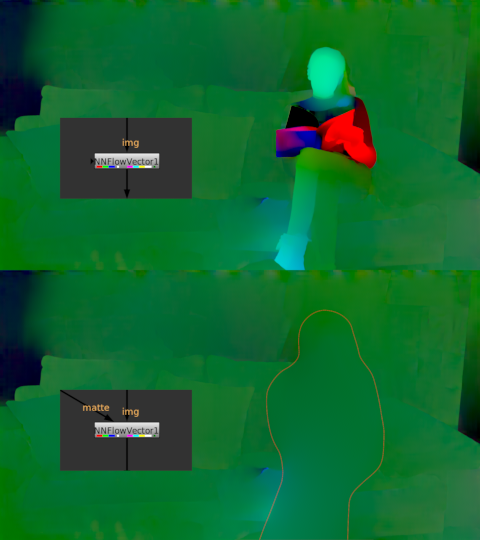

We adapted a modern transformer-based optical flow solution to the plugin and trained the network from scratch using the same dataset as the already released solution. After a few months of work we released NNFlowVector v2.1 with the new “AA” variant. It has been welcomed by lots of users across the globe, so we are very happy with the addition.

After the release of NNFlowVector, we felt it was time to circle back to our first plugin, NNSuperResolution. This is where we are currently spending all our development resources. The most important change is swapping out the rather old and simple optical flow solution that was baked into the sequence mode upscale solution. We are replacing it with the very successful model from our NNFlowVector plugin. With this new and very performant optical flow generator, the upscale solution can warp the previous frame onto the current one much more reliably, and hence has more information to work with. The result is an overall sharper and more stable image with fewer artifacts. It does mean retraining the whole solution from scratch though, so it’s taking quite a lot of time. All variants need retraining: Alexa 2x, Alexa 4x, CG 2x and CG 4x. Each is a two-step process that takes one to two weeks of processing time. We are also looking into the possibility of a CG/RGBA solution for stills, basically so you can upscale textures with an alpha channel. This solution is not working satisfactorily yet though, so we are not making any promises about it just yet. The aim is to get NNSuperResolution v4.0 released at the end of the year.

After the new NNSuperResolution is released, it’s time to circle back to NNCleanup again. The big thing to tackle then is a sequence mode, i.e. being able to clean up/paint away objects in moving material. This work has already started, but it’s very complex, so it will take quite a lot of development time. Our aim and hope is to get NNCleanup v2.0 released, with a working sequence mode, some time during 2024.

Cheers,

David