Questions always come up when running software on many different system configurations. We’ll try to gather the most common ones here and add new ones as the need arises.

Is Nuke Indie supported?

Yes, Nuke Indie is supported from v2.1.0. For the plugin to work in Nuke Indie, you have to use the latest release of Nuke 13.x or Nuke 14.x available from Foundry.

How are licenses consumed?

All of Pixelmania’s licenses are per host, i.e. you can have multiple jobs using NNFlowVector on the same host and only use a single license. If you query the license server it may report multiple handles for those jobs, but it’s still only using a single license token.

As of NNFlowVector v2.1.0 we also support dedicated render licenses. The default behaviour when rendering in Nuke’s batch mode (i.e. command line rendering) is to check out a render license. If the plugin can’t find a render license, it will instead try to check out a GUI license. This behaviour is preferred if you have a node-locked license or a site license. It is not preferred if you have only a few GUI licenses and a larger number of render licenses. To stop the node from using GUI licenses when rendering, you can set the environment variable PIXELMANIA_NNFLOWVECTOR_DONT_RENDER_USING_GUI_LICENSES to the value “1” (please see the section “2.3 Environment Variables” in the documentation for more about this).
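As a minimal sketch, assuming batch renders are launched from a Python wrapper script, the variable can be set on the environment that is handed to the render process. The helper name `render_env` is hypothetical; only the variable name and the value “1” come from the text above.

```python
import os


def render_env(base_env=None):
    """Return a copy of the environment with the GUI-license fallback disabled."""
    env = dict(os.environ if base_env is None else base_env)
    # Variable name and value as described in the answer above.
    env["PIXELMANIA_NNFLOWVECTOR_DONT_RENDER_USING_GUI_LICENSES"] = "1"
    return env
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when starting the batch render, so the setting only applies to render jobs and not to interactive sessions.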

Do you offer an educational discount?

As of now, we do not offer a standard educational discount. We’ve chosen to keep our license prices low instead, so that most people and companies can afford a license anyway. You are of course always welcome to contact us directly and state your case.

Am I able to transfer my NNFlowVector license to another machine?

Yes, please fill out the License Transfer Form.

The form should be filled in, signed, scanned and emailed to [email protected], and we will send you a new license file as soon as we can.

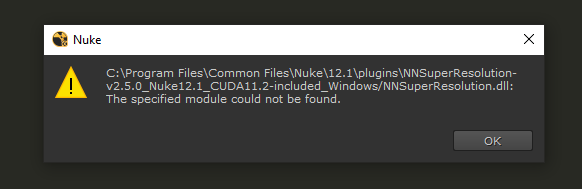

I’m getting a similar error to this when trying to run NNFlowVector in Nuke on Windows

The error message is complaining that “The specified module could not be found”, and is referring to the main plugin dll file “NNFlowVector.dll”. (The screenshot here is mentioning NNSuperResolution.dll, but the error is the same.)

To install NNFlowVector properly on Windows, you first have to add the installation folder to your NUKE_PATH. Alternatively, you can do this with the Python command nuke.pluginAddPath("/full/path/to/plugin/install/folder") in your init.py file. In addition, you need to add the same installation folder path to your system’s PATH environment variable. This is needed because of the way Windows finds library dependencies. Please refer to the “Plugin Installation” section in the “Documentation.pdf” document that was bundled with the plugin download. If you skip this step, you will get an error similar to the one in the screenshot above.
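When troubleshooting this error, it can help to confirm that the installation folder really is listed in both variables. The following is a small diagnostic sketch, not part of the plugin; the function name `folder_on_path_var` is hypothetical.

```python
import os


def folder_on_path_var(folder, var_name, env=None, sep=os.pathsep):
    """Return True if `folder` is one of the entries in the path-style variable `var_name`."""
    env = os.environ if env is None else env
    # Normalise so that trailing slashes and (on Windows) letter case do not matter.
    wanted = os.path.normcase(os.path.normpath(folder))
    entries = env.get(var_name, "").split(sep)
    return any(
        entry and os.path.normcase(os.path.normpath(entry)) == wanted
        for entry in entries
    )
```

Calling it once with `"NUKE_PATH"` and once with `"PATH"` shows which of the two steps is missing. If you added the folder with nuke.pluginAddPath in init.py instead, the NUKE_PATH check will report False, which is expected.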

Terminal warning about named tensors

If you are seeing this warning printed in the terminal when using NNFlowVector: “[W TensorImpl.h:982] Warning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (function operator ())” you can safely ignore it. It will not affect the rendered output from the plugin. We are planning to get rid of the warning in a future version of NNFlowVector.

Do you really need CUDA installed to be able to use the GPU?

We understand that there are situations where you might not be able to install CUDA on the workstation you want to run NNFlowVector on; not having administrative permissions could be one such reason. There are ways around this, since what the plugin actually needs is a set of dynamic libraries from the NVIDIA CUDA toolkit and the NVIDIA cuDNN toolkit. You can download these toolkits from NVIDIA and simply copy the library files to the same directory as the installed plugin, and it will find them when needed. To make this easier, we also provide downloads of NNFlowVector with the needed NVIDIA libraries bundled into the same zip archive. You can then install the whole content of the zip directly into a NUKE_PATH of your choice and things will just work.

For reference, this is a list of the library files that you need for running the CUDA10.1 compatible version of NNFlowVector:

- libcublasLt.so.10

- libcublas.so.10

- libcudart.so.10.1

- libcufft.so.10

- libcurand.so.10

- libcusolver.so.10

- libcusparse.so.10

- libnvrtc-builtins.so

- libnvrtc.so.10.1

- libnvToolsExt.so.1

There might be other versions of the files above for other CUDA toolkit versions, but the base names should be the same.

You can download the CUDA toolkit from NVIDIA’s website here:

https://developer.nvidia.com/cuda-downloads

You also need these files from the NVIDIA cuDNN package:

- libcudnn_cnn_infer.so.8

- libcudnn_ops_infer.so.8

- libcudnn.so.8

You can download the cuDNN package from NVIDIA’s website here:

https://developer.nvidia.com/cudnn
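If you copy the libraries by hand, the two file lists above can be checked against the plugin folder with a short script. This is a sketch under the assumption that you use the CUDA10.1 build on Linux and keep the libraries next to the plugin; the function name `missing_libraries` is hypothetical.

```python
from pathlib import Path

# File names copied from the CUDA toolkit list above (CUDA10.1 build).
CUDA_LIBS = [
    "libcublasLt.so.10", "libcublas.so.10", "libcudart.so.10.1",
    "libcufft.so.10", "libcurand.so.10", "libcusolver.so.10",
    "libcusparse.so.10", "libnvrtc-builtins.so", "libnvrtc.so.10.1",
    "libnvToolsExt.so.1",
]
# File names copied from the cuDNN list above.
CUDNN_LIBS = ["libcudnn_cnn_infer.so.8", "libcudnn_ops_infer.so.8", "libcudnn.so.8"]


def missing_libraries(plugin_dir, names=CUDA_LIBS + CUDNN_LIBS):
    """Return the listed library files that are not present in `plugin_dir`."""
    folder = Path(plugin_dir)
    return [name for name in names if not (folder / name).exists()]
```

An empty result means every listed file is in the folder. A non-empty result is only a problem if CUDA and cuDNN are not installed system-wide, since the libraries can also be found there.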

You always need a recent NVIDIA graphics driver installed and working to be able to use your NVIDIA GPU.

You can download the latest graphics drivers from NVIDIA’s website here:

https://www.nvidia.com/Download/index.aspx

What NVIDIA graphics cards/architectures are supported?

The currently supported CUDA architectures are the following compute capabilities (see https://en.wikipedia.org/wiki/CUDA):

- 3.5 (Kepler)

- 5.0 (Maxwell)

- 5.2 (Maxwell)

- 6.0 (Pascal)

- 6.1 (Pascal)

- 7.0 (Volta)

- 7.5 (Turing)

- 8.0 (Ampere)*

- 8.6 (Ampere)*

- 8.9 (Ada Lovelace)**

*The Ampere architecture, i.e. the NVIDIA RTX30xx series of cards, works with both CUDA variants of NNFlowVector (CUDA10.1 or CUDA11.2). The CUDA11.2 versions have native support and will work directly. The CUDA10.1 versions need to compile the CUDA kernels from the PTX code. Please see more info above in the environment variables section.

** The RTX40xx series of cards will also work, but will have to rely on the JIT compilation of the PTX code for now, i.e. there is no native support just yet. This will change in the future, when we are able to release a version compiled against CUDA 11.8.
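The list and its two footnotes can be condensed into a small lookup, sketched below. The names `SUPPORTED` and `support_mode` are hypothetical, and the sketch assumes that every capability without a footnote is natively supported by both builds, which the list implies but does not state outright.

```python
# Compute capabilities and architecture names copied from the list above.
SUPPORTED = {
    "3.5": "Kepler", "5.0": "Maxwell", "5.2": "Maxwell",
    "6.0": "Pascal", "6.1": "Pascal", "7.0": "Volta", "7.5": "Turing",
    "8.0": "Ampere", "8.6": "Ampere", "8.9": "Ada Lovelace",
}


def support_mode(capability, build):
    """Return 'native', 'ptx-jit' or 'unsupported'.

    `build` is 'CUDA10.1' or 'CUDA11.2', the two variants named above.
    """
    arch = SUPPORTED.get(capability)
    if arch is None:
        return "unsupported"
    if arch == "Ada Lovelace":
        # Second footnote: no native support yet, relies on JIT compilation of PTX.
        return "ptx-jit"
    if arch == "Ampere" and build == "CUDA10.1":
        # First footnote: the CUDA10.1 build compiles the kernels from PTX.
        return "ptx-jit"
    # Assumption: capabilities without a footnote run natively on both builds.
    return "native"
```

For example, `support_mode("8.6", "CUDA10.1")` reports that an RTX30xx card on the CUDA10.1 build goes through PTX compilation, while the same card on the CUDA11.2 build runs natively.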

How do I find my machine’s HostID?

If you need information on how to retrieve your Host ID, either for buying licenses or for requesting free trial licenses, please visit the License Documentation page.

Why is the plugin crashing directly at creation time using my rather old computer?

Our plugins are using machine learning libraries that are compiled with AVX and AVX2 instructions for performance reasons. You can read more about AVX/AVX2 on the Wikipedia page: https://en.wikipedia.org/wiki/Advanced_Vector_Extensions

There you can also find a list of CPUs that support it. In broad strokes, CPUs from 2013 and later should support these instruction sets. Please note that we are not using AVX512 instructions.
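On Linux you can also check the CPU directly, since the kernel lists the supported instruction sets in the `flags` line of /proc/cpuinfo. The sketch below parses that text; the function name `avx_flags` is hypothetical, and the approach does not apply to Windows.

```python
def avx_flags(cpuinfo_text):
    """Return whether 'avx' and 'avx2' appear in the CPU flags of /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # The value is a space-separated list of feature names.
            found.update(value.split())
    return {name: name in found for name in ("avx", "avx2")}
```

A typical call is `avx_flags(open("/proc/cpuinfo").read())`. If either entry in the result is False, the crash at node creation is most likely caused by the missing instruction set.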

Why is the plugin so large?

This is a result of including a lot of needed static libraries for neural network processing, and of supporting many different graphics cards for acceleration. The plugin could have been much smaller if all these pieces of software were instead required to be installed as dependencies on the system. That would defeat the benefit of having self-contained plugins that simply work by themselves, hence the plugin needs to be a rather large file.

I’m having problems running NNFlowVector in the same Nuke environment as KeenTools FaceTracker/FaceBuilder

We have noticed that loading both NNFlowVector and FaceTracker in the same Nuke environment makes the plugins clash with each other. The error you get is that NNFlowVector cannot find the GOMP_4.0 symbols in the libgomp.so shared library that is loaded. Both NNFlowVector and FaceTracker use the libgomp.so shared library, but KeenTools ship their plugin with its own copy of this library, and that copy is a rather old version. As a result, NNFlowVector tries to use the version from FaceTracker, expects a newer one, and fails.

The fix we have found to work well is simply to delete the libgomp.so file that is shipped with KeenTools. You probably already have a newer libgomp.so file installed on your system. If that is the case, both NNFlowVector and FaceTracker will pick up the newer libgomp.so shared library from the system, and since this library is backwards compatible everything will work. If you don’t have libgomp.so installed on your system, you need to install it to run the plugins. How that is done depends on your operating system. On CentOS you can install it by running the command “yum install libgomp”.
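To see which libgomp.so copy is too old, one rough heuristic is to look for the version string in the raw bytes of the file. This is only a sketch with a hypothetical function name; an ELF inspection tool such as readelf gives a more reliable answer.

```python
from pathlib import Path


def mentions_symbol_version(library_path, version=b"GOMP_4.0"):
    """Heuristic: True if the raw bytes of the library contain the version string."""
    return version in Path(library_path).read_bytes()
```

A copy of libgomp.so for which this returns False most likely predates the GOMP_4.0 symbols that NNFlowVector expects.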

I’m having problems running NNFlowVector in the same Nuke environment as Kognat’s Rotobot

Please make sure that the versions of NNFlowVector and Rotobot you use are built against exactly the same CUDA version. We have coordinated with Kognat and made sure that we both have compatible releases, built against either CUDA10.1/cuDNN8.0.5 or CUDA11.2/cuDNN8.1.0.

I’m having problems/crashing when using NNFlowVector together with Foundry’s AIR nodes in Nuke13.x

You are most likely using the CUDA11.2/cuDNN8.1.0 build if this is happening. This build of NNFlowVector is for modern GPUs like the RTX3080 cards, and works well and fast except when you need to process NNFlowVector output with, for example, CopyCat nodes. If you need to support this, you have to use the CUDA10.1/cuDNN8.0.5 build instead. Please see more info about this above in the environment variables for CUDA section.